Autonomous vehicles can’t move safely without knowing their exact position, yet ordinary GPS, with its 1–3 m error, is far too coarse for lane-level decisions. In urban canyons and tunnels, signals degrade or vanish completely, leaving the system “blind.” To achieve centimeter-level accuracy, modern self-driving platforms rely on multi-sensor fusion, combining GNSS, IMU, LiDAR, and visual perception to keep localization continuous and reliable across a wide range of driving conditions.

Autonomous vehicles achieve centimeter-level accuracy through GNSS/INS integration, LiDAR mapping, and visual perception, with high-precision IMUs forming the core of localization when GPS signals fail.

Localization is the unseen backbone of autonomous driving. It tells a vehicle exactly where it is, even when satellites disappear. By fusing IMU, GNSS, LiDAR, and camera data, modern systems maintain precise positioning on open roads, in tunnels, and through urban canyons.


The Core Technologies Behind Autonomous Localization

Modern autonomous driving localization combines four major sensing technologies, each serving a unique role in perception and navigation:

- GNSS (Global Navigation Satellite System): Provides global coordinates and absolute position reference.

- INS (Inertial Navigation System): Tracks motion through accelerometers and gyroscopes, bridging gaps when GNSS signals drop.

- LiDAR (Light Detection and Ranging): Generates 3D environmental maps for centimeter-level spatial matching.

- Vision systems: Use cameras to identify lane markings, traffic signs, and landmarks for semantic understanding.

Together, these systems form a redundant, complementary architecture that supports precise localization under complex road, weather, and lighting conditions. Of the four, GNSS is the natural starting point, and its limitations show why fusion is essential.

Why GNSS Alone Can’t Make Cars Autonomous

Standard GPS offers only 1–3 m accuracy: fine for phones, but fatal for self-driving cars. A typical lane is only about 3.5 m wide, so even a small drift could place a vehicle across lane lines or cause it to misjudge a turn.

In urban canyons, signals bounce off buildings before reaching the receiver, producing erratic position jumps known as multipath errors; in tunnels, they vanish entirely. These gaps make pure GPS unreliable, showing that autonomous systems need sensor fusion for continuous, centimeter-level localization.

Enhancing GNSS Accuracy for Autonomous Driving

To improve on standard GPS, autonomous systems use enhanced GNSS correction methods such as RTK (Real-Time Kinematic) positioning. By receiving real-time error corrections from nearby reference stations, these systems can reach 5–10 cm accuracy, enabling lane-level positioning.

However, GNSS still depends on a clear view of the sky and a stable link to the correction service. In tunnels or dense urban areas, signals degrade or disappear, so satellite positioning alone is insufficient and must be supported by an INS for continuous localization.

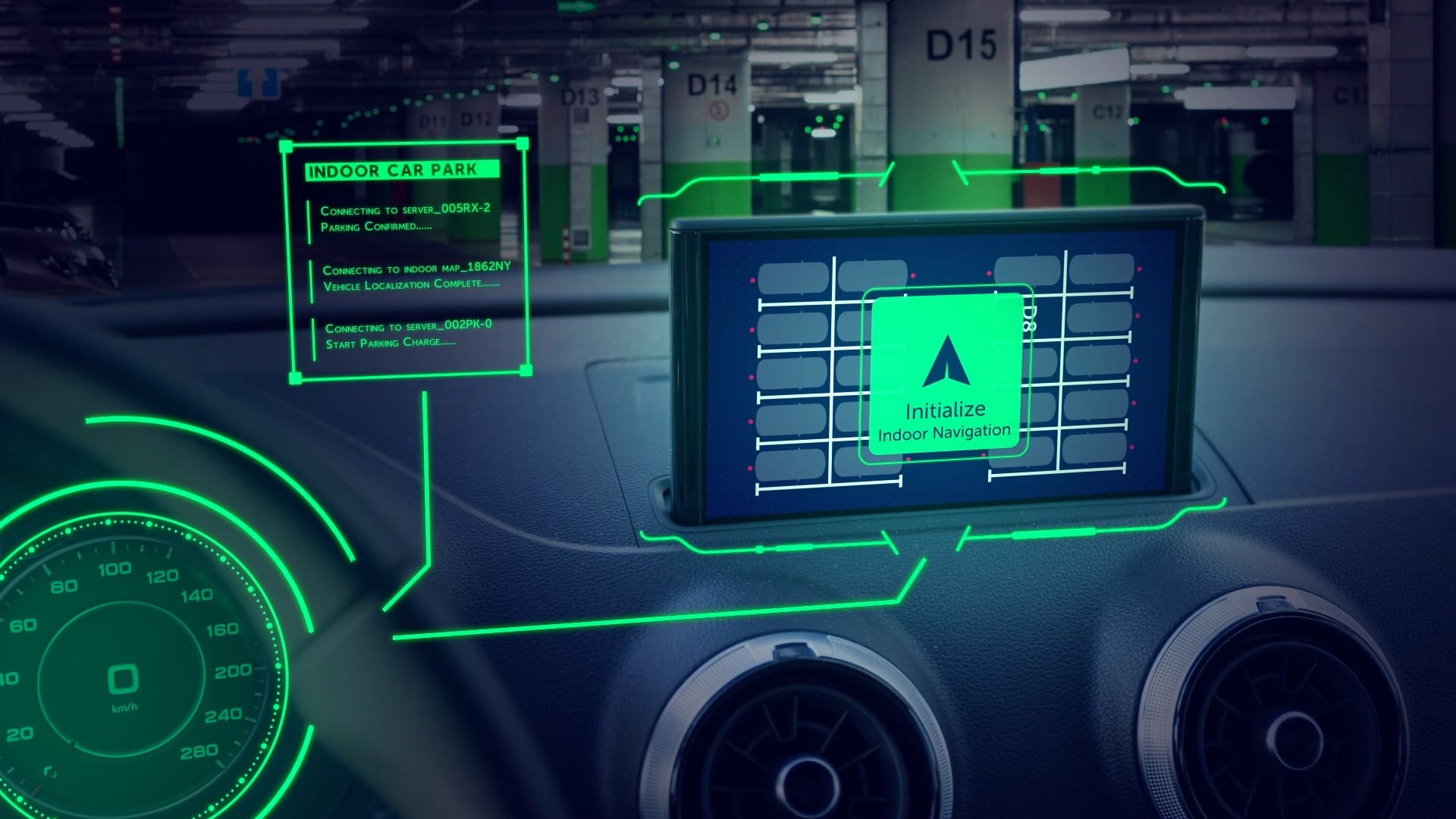

When GNSS Fails, INS Keeps the Vehicle Aware

When satellites disappear, the Inertial Navigation System (INS) takes control. Using an onboard Inertial Measurement Unit (IMU), it tracks motion through acceleration and angular rate — allowing the vehicle to “dead-reckon” its position even without external signals.

This independence makes INS the bridge through tunnels, underpasses, or urban canyons. However, errors accumulate over time, causing gradual drift. To stay precise, the INS must be continuously corrected by GNSS or other sensors like LiDAR and cameras.

Inside the INS: The Core of Inertial Localization

The Inertial Navigation System (INS) is the heart of autonomous localization — a self-contained navigation solution that allows vehicles to track their position, velocity, and attitude even when external signals disappear.

What makes up an INS?

- A set of three gyroscopes measures angular rate to capture rotational motion.

- A set of three accelerometers measures linear acceleration along three orthogonal axes.

- Together, these sensors form the Inertial Measurement Unit (IMU), which continuously detects every subtle movement of the vehicle in real time.

How the INS works

By integrating IMU measurements, the INS calculates the vehicle’s attitude, velocity, and position relative to an initial reference. Even when GNSS signals vanish, the system continues to estimate motion through dead-reckoning, providing smooth and uninterrupted localization output.
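The integration chain (angular rate to attitude, acceleration to velocity, velocity to position) can be sketched in a simplified planar form. This is a minimal illustration, not a full strapdown INS: it assumes the vehicle moves on a flat plane, that `accel_fwd` has already been gravity-compensated and aligned with the vehicle's forward axis, and it uses plain Euler integration. `PlanarState` and `dead_reckon_step` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class PlanarState:
    x: float = 0.0        # east position in the map frame, m
    y: float = 0.0        # north position in the map frame, m
    heading: float = 0.0  # yaw angle from the map x axis, rad
    speed: float = 0.0    # forward speed, m/s


def dead_reckon_step(s: PlanarState, accel_fwd: float, yaw_rate: float, dt: float) -> PlanarState:
    """Advance the state by one IMU sample with simple Euler integration."""
    heading = s.heading + yaw_rate * dt        # gyro rate -> attitude
    speed = s.speed + accel_fwd * dt           # acceleration -> velocity
    x = s.x + speed * math.cos(heading) * dt   # velocity -> position
    y = s.y + speed * math.sin(heading) * dt
    return PlanarState(x, y, heading, speed)


# Hypothetical input: 10 s of 100 Hz samples at a steady 10 m/s in a gentle left turn.
state = PlanarState(speed=10.0)
for _ in range(1000):
    state = dead_reckon_step(state, accel_fwd=0.0, yaw_rate=0.05, dt=0.01)
print(f"x={state.x:.1f} m, y={state.y:.1f} m, heading={math.degrees(state.heading):.1f} deg")
```

Here the vehicle traces an arc of radius v/ω = 200 m, so after 10 s its heading has turned 0.5 rad (about 28.6°). Any bias in `yaw_rate` or `accel_fwd` is integrated in exactly the same way, which is where INS drift comes from.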

Why INS quality matters

The accuracy of an INS depends on sensor performance, including bias stability, angle random walk (ARW), and temperature compensation. A high-grade INS drifts slowly enough to keep position errors small through the outages of a typical tunnel, urban canyon, or covered route, supporting consistent navigation in GNSS-denied environments.

INS and GNSS Fusion: The Industry’s Gold Standard

Complementary strengths

Neither system can operate perfectly alone.

GNSS provides global positioning but loses reliability under signal obstruction, while the INS offers continuous motion tracking that gradually drifts over time.

By combining both, autonomous vehicles gain absolute accuracy from GNSS and short-term stability from the INS.

Fusion in action

Through Kalman filtering (in practice often an extended or error-state variant), GNSS and INS data are fused in real time:

- When GNSS is available, it continuously corrects INS drift.

- When GNSS is lost, the INS maintains accurate motion updates until satellite signals return.

This predict–update cycle ensures seamless, reliable localization — even in tunnels or dense urban canyons.
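The predict–update cycle can be illustrated with a deliberately small one-dimensional Kalman filter. This sketch assumes a vehicle driving straight at constant speed, an IMU acceleration already rotated into the along-track direction with gravity removed, a hypothetical constant accelerometer bias, and 1 Hz GNSS fixes with 5 cm noise; every number is illustrative. Unlike production filters, which typically also estimate IMU biases as extra states, this one tracks only position and velocity. Function names are hypothetical.

```python
import random


def kf_predict(x, P, accel, dt, q):
    """Propagate the [position, velocity] state using the IMU acceleration as input."""
    pos, vel = x
    x_new = [pos + vel * dt + 0.5 * accel * dt * dt, vel + accel * dt]
    # P' = F P F^T + Q with F = [[1, dt], [0, 1]] and a simple diagonal Q = q * dt * I
    p00, p01, p10, p11 = P[0][0], P[0][1], P[1][0], P[1][1]
    P_new = [[p00 + dt * (p01 + p10) + dt * dt * p11 + q * dt, p01 + dt * p11],
             [p10 + dt * p11, p11 + q * dt]]
    return x_new, P_new


def kf_update(x, P, z, r):
    """Correct the state with a GNSS position fix z of variance r (H = [1, 0])."""
    s = P[0][0] + r                          # innovation variance
    k0, k1 = P[0][0] / s, P[1][0] / s        # Kalman gain
    innov = z - x[0]
    x_new = [x[0] + k0 * innov, x[1] + k1 * innov]
    P_new = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
             [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
    return x_new, P_new


random.seed(0)
dt, true_vel, accel_bias = 0.01, 10.0, 0.05   # 100 Hz IMU; hypothetical 0.05 m/s^2 bias
x, P = [0.0, true_vel], [[1.0, 0.0], [0.0, 1.0]]
true_pos, max_outage_err = 0.0, 0.0
for k in range(1, 4001):
    true_pos += true_vel * dt                                  # truth: constant speed
    accel_meas = accel_bias + random.gauss(0.0, 0.1)          # IMU reads bias + noise
    x, P = kf_predict(x, P, accel_meas, dt, q=0.1)            # predict on every IMU sample
    if 2000 <= k < 3000:                                       # simulated tunnel, t = 20-30 s
        max_outage_err = max(max_outage_err, abs(x[0] - true_pos))
    elif k % 100 == 0:                                         # 1 Hz GNSS fix, 5 cm noise
        z = true_pos + random.gauss(0.0, 0.05)
        x, P = kf_update(x, P, z, r=0.05 ** 2)
final_err = abs(x[0] - true_pos)
print(f"max error during outage: {max_outage_err:.2f} m, final error: {final_err:.2f} m")
```

While GNSS is available, each fix pulls the drifting inertial estimate back. During the simulated tunnel the uncorrected bias makes the error grow roughly quadratically (½ · 0.05 · 10² = 2.5 m after 10 s), and once fixes resume the filter converges back to GNSS-level accuracy.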

Industry standard

This integration has become the cornerstone of modern autonomous navigation.

With RTK corrections, a well-calibrated GNSS/INS system can deliver centimeter-level accuracy in dynamic conditions and bridge short outages with slowly growing error, providing both precision and reliability across a wide range of driving environments.

From Coordinates to Reality: How Localization Mathematics Works

Accurate localization depends on converting data between two coordinate frames — the vehicle frame, which moves with the car, and the map frame, fixed to Earth. Using rotation matrices or quaternions, sensor data like acceleration and angular rate are transformed into a global reference. Without this alignment, even precise sensors would misread motion, causing drift and positional error.
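The frame transformation can be sketched with a unit quaternion. This example assumes an East-North-Up map frame (z pointing up), quaternions stored as `(w, x, y, z)`, and a vehicle that is level so only yaw matters. It also shows one detail the paragraph implies: an accelerometer measures specific force, so gravity must be removed after rotating into the map frame. All function names are hypothetical.

```python
import math

GRAVITY = 9.80665  # m/s^2


def quat_mul(a, b):
    """Hamilton product of two quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def body_to_map(q, v):
    """Rotate a body-frame vector into the map frame: v_map = q * v * conj(q)."""
    w, x, y, z = q
    _, rx, ry, rz = quat_mul(quat_mul(q, (0.0, *v)), (w, -x, -y, -z))
    return (rx, ry, rz)


def yaw_quat(yaw):
    """Unit quaternion for a rotation of `yaw` radians about the map's up (z) axis."""
    return (math.cos(yaw / 2), 0.0, 0.0, math.sin(yaw / 2))


# Level vehicle heading 90 deg (nose along map +y), speeding up at 2 m/s^2.
# The accelerometer measures specific force, so it also reads +g on its z axis.
q = yaw_quat(math.pi / 2)
f_map = body_to_map(q, (2.0, 0.0, GRAVITY))
accel_map = (f_map[0], f_map[1], f_map[2] - GRAVITY)   # remove gravity in the map frame
print(tuple(round(c, 6) for c in accel_map))
```

The forward acceleration along the vehicle's x axis ends up on the map's y axis, as expected for a vehicle pointing north. Skipping the rotation would credit that acceleration to the wrong direction, which is exactly the kind of misread motion the paragraph warns about.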

LiDAR’s Role in Centimeter-Level Accuracy

When GNSS loses visibility, LiDAR can take over as the source of absolute position.

Unlike satellite-based positioning, LiDAR localization uses real-time 3D scanning to “see” the environment around the vehicle.

How it works:

- The LiDAR continuously emits laser pulses to build point clouds of the surroundings.

- These scans are matched against a high-definition map using algorithms such as ICP (Iterative Closest Point) or NDT (Normal Distributions Transform).

- The system minimizes the alignment error between scan and map to estimate the vehicle’s position and orientation with centimeter-level accuracy.
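The matching step can be sketched with a minimal 2D point-to-point ICP in plain Python. This assumes a hypothetical map made of two walls meeting at a corner and a synthetic scan produced by displacing those map points by a small rotation and translation, standing in for a pose error. It covers ICP only (not NDT), uses brute-force nearest neighbours, and the function names are hypothetical.

```python
import math


def apply_pose(theta, tx, ty, pts):
    """Rotate points by theta and then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in pts]


def best_rigid_2d(src, dst):
    """Closed-form rotation + translation minimizing squared distance between paired points."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    s_cos = s_sin = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy   # center both sets
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def icp_2d(scan, map_pts, iterations=20):
    """Point-to-point ICP: estimate the pose that aligns `scan` onto `map_pts`."""
    theta, tx, ty = 0.0, 0.0, 0.0
    for _ in range(iterations):
        moved = apply_pose(theta, tx, ty, scan)
        # Brute-force nearest neighbours; real systems use a k-d tree or voxel grid.
        pairs = [min(map_pts, key=lambda m: (m[0] - p[0]) ** 2 + (m[1] - p[1]) ** 2)
                 for p in moved]
        d_theta, d_tx, d_ty = best_rigid_2d(moved, pairs)
        # Compose the increment with the current estimate: T <- dT * T
        c, s = math.cos(d_theta), math.sin(d_theta)
        theta, tx, ty = theta + d_theta, c * tx - s * ty + d_tx, s * tx + c * ty + d_ty
    return theta, tx, ty


# Hypothetical map: two walls meeting at a corner, sampled every 0.5 m.
map_pts = [(0.5 * i, 0.0) for i in range(21)] + [(0.0, 0.5 * i) for i in range(1, 21)]
# Synthetic scan: the map points displaced by 0.02 rad and (0.1, -0.05) m.
scan = apply_pose(0.02, 0.1, -0.05, map_pts)
theta, tx, ty = icp_2d(scan, map_pts)
aligned = apply_pose(theta, tx, ty, scan)
print(f"theta={theta:.4f} rad, t=({tx:.3f}, {ty:.3f}) m")
```

ICP converges reliably only from a good initial guess. In a vehicle, that guess usually comes from the GNSS/INS prediction, which is one reason the sensors are fused rather than used in isolation.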

Why it matters:

This technique helps maintain consistent localization in tunnels, urban canyons, or forested roads, where GNSS signals are unreliable or completely lost.

Seeing Is Believing: Visual Localization and Lane-Level Mapping

As autonomous driving systems evolve, visual localization has become an indispensable complement to GNSS and LiDAR. While satellites provide global reach and LiDAR ensures geometric precision, cameras add context and meaning — enabling vehicles to interpret their surroundings the way humans do.

Role of vision in localization

Visual sensors bring semantic understanding to autonomous navigation. Cameras capture lane markings, traffic signs, and road boundaries, providing rich context beyond geometry.

Core process

Captured images are compared against a high-definition map. Algorithms analyze visual features and apply probabilistic estimation (such as particle filtering) to determine the most likely vehicle position.
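Particle filtering can be illustrated on a one-dimensional version of the problem. This sketch assumes the vehicle's only unknown is its position along a road, the "map features" are traffic signs at hypothetical positions, and the camera reports the range to the next sign ahead. Real visual localization matches many features (lane markings, signs, poles) in 2D or 3D. All names and numbers here are hypothetical.

```python
import math
import random

SIGN_POSITIONS = [30.0, 75.0, 90.0, 160.0]  # hypothetical HD-map sign locations, m along road


def expected_range(s):
    """Distance from road position s to the next mapped sign ahead, or None past the last one."""
    ahead = [p - s for p in SIGN_POSITIONS if p >= s]
    return min(ahead) if ahead else None


def likelihood(measured, s, sigma=1.0):
    """Gaussian likelihood of the camera's range-to-next-sign given a particle at s."""
    expected = expected_range(s)
    if expected is None:
        return 0.0
    return math.exp(-0.5 * ((measured - expected) / sigma) ** 2)


def pf_step(particles, odometry, measured, motion_sigma=0.3):
    # 1. Predict: move every particle by the odometry plus random motion noise.
    particles = [p + odometry + random.gauss(0.0, motion_sigma) for p in particles]
    # 2. Weight: score how well each particle explains the camera measurement.
    weights = [likelihood(measured, p) + 1e-300 for p in particles]  # avoid an all-zero set
    # 3. Resample: draw a new particle set in proportion to the weights.
    return random.choices(particles, weights=weights, k=len(particles))


random.seed(1)
particles = [random.uniform(0.0, 200.0) for _ in range(2000)]  # starting position unknown
true_s = 12.0
for _ in range(10):
    true_s += 5.0
    measured = expected_range(true_s) + random.gauss(0.0, 0.5)
    particles = pf_step(particles, 5.0, measured)
estimate = sum(particles) / len(particles)
print(f"true position {true_s:.1f} m, estimate {estimate:.1f} m")
```

After the first measurement, particles cluster at four positions that all see the same range to the next sign. Passing the sign at 30 m makes the three wrong clusters inconsistent with the camera, and they die out during resampling. This ability to carry several hypotheses at once is why particle filters suit map matching with repetitive features.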

Main advantage

Unlike LiDAR, which measures shape, visual localization interprets meaning. It enables lane-level accuracy and adds redundancy to the overall system, although cameras themselves remain sensitive to glare, darkness, and bad weather.

What Challenges Still Limit Real-World Localization

Even with advanced fusion of GNSS, IMU, LiDAR, and vision, real-world localization still faces major practical hurdles. Accuracy achieved in controlled testing doesn’t always carry over to unpredictable roads.

Dynamic environments

Road construction, parked vehicles, pedestrians, and temporary objects create mismatches between live sensor data and prebuilt maps. These inconsistencies can lead to short-term positioning errors.

Map maintenance

High-definition maps require constant updates. Seasonal changes, vegetation growth, or minor road layout adjustments can degrade localization accuracy if not reflected in the database.

Sensor calibration

Accurate fusion depends on precise alignment among LiDARs, cameras, and IMUs. Even millimeter-scale mechanical shifts or thermal expansion can introduce angular misalignment, producing systematic errors that grow with distance and can accumulate as drift over time.
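A short calculation shows why millimeters matter. This sketch assumes a hypothetical sensor bracket with a 0.5 m mounting baseline whose one end shifts sideways by 1 mm, and asks how far points at various ranges appear displaced. The numbers are illustrative, not taken from any specific vehicle.

```python
import math


def misalignment_deg(shift_m, baseline_m):
    """Yaw misalignment caused by shifting one end of a mounting baseline sideways."""
    return math.degrees(math.atan2(shift_m, baseline_m))


def lateral_error_m(range_m, angle_deg):
    """Sideways displacement of a point at range_m seen through a misaligned sensor."""
    return range_m * math.tan(math.radians(angle_deg))


angle = misalignment_deg(0.001, 0.5)   # hypothetical: 1 mm shift over a 0.5 m bracket
print(f"misalignment: {angle:.3f} deg")
for r in (10.0, 50.0, 100.0):
    print(f"point at {r:>5.0f} m is displaced by {100 * lateral_error_m(r, angle):.1f} cm")
```

Under these assumptions the shift produces about 0.11° of yaw error, which moves a point 50 m away by roughly 10 cm and one 100 m away by roughly 20 cm, already larger than the centimeter-level targets discussed earlier.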

Why IMU Quality Defines the Future of Autonomous Navigation

The Inertial Measurement Unit (IMU) is the final safeguard of autonomous localization. When GNSS, LiDAR, and vision lose reliability, it alone keeps the vehicle aware of its true motion. The stability of any self-driving system depends on how precisely the IMU can measure and maintain orientation over time.

High-end IMUs stand apart through bias stability, angle random walk (ARW), and thermal compensation. These parameters define how long the system can sustain accurate dead-reckoning without external correction. While consumer-grade sensors may drift by meters within seconds, tactical-grade IMUs drift far more slowly, stretching the usable dead-reckoning window from seconds to tens of seconds or more: the difference between navigation and confusion.
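The link between bias stability and dead-reckoning time can be made concrete: an uncorrected constant accelerometer bias b produces a position error of ½·b·t². The sketch below assumes illustrative bias levels of 10 mg and 0.1 mg (not datasheet figures for any specific product) and ignores gyro errors, noise, and any aiding.

```python
G = 9.80665  # standard gravity, m/s^2


def drift_from_accel_bias(bias_mg, seconds):
    """Position error from an uncorrected constant accelerometer bias: 0.5 * b * t^2."""
    bias_ms2 = bias_mg * 1e-3 * G  # milli-g -> m/s^2
    return 0.5 * bias_ms2 * seconds ** 2


# Illustrative bias levels only; real values depend on the specific sensor and calibration.
for label, bias_mg in (("consumer-like, 10 mg", 10.0), ("tactical-like, 0.1 mg", 0.1)):
    errors = ", ".join(f"{t:>3d} s: {drift_from_accel_bias(bias_mg, t):8.2f} m" for t in (5, 30, 60))
    print(f"{label:<22} {errors}")
```

With these assumed values, a 10 mg bias already produces about 1.2 m of error after 5 s, while a 0.1 mg bias stays below half a meter after 30 s. Gyro bias makes matters worse in practice: a slowly growing tilt error leaks gravity into the horizontal channels, adding position error that grows roughly with the cube of time.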

As autonomy advances, the IMU remains its foundation. Future systems will integrate inertial sensing with AI-driven fusion and adaptive modeling, but true reliability will still come from one core principle: the quality of motion sensing defines the confidence of navigation.